We can then imagine that this same friend's nervous system might be altered (whether through mutation, injury, or pharmacological intervention) so that they no longer feel pain. While we might initially be shocked at such a turn of events, we could be convinced of the change if our friend stopped responding to usually painful stimuli (such as our villain, the stove-top) with the same clear "pained" reaction. Changing the nervous system clearly changes whether or not the individual can feel pain, which leads us to believe that pain is something the brain does.

|

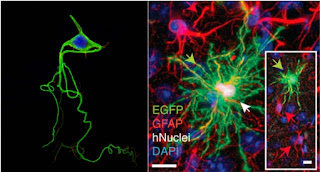

| Pain is something the brain does- but how do we know when it is doing it? Image from here. |

However, in addition to our verbal friend, there is an untold number of animals (including other types of humans), plants, virions, rocks, alien beings, artificial intelligences, puppets, and tissue cultures whose internal states aren't as readily apparent. Whether it is because they can't talk (as in the case of most of that list) or because we suspect their speech might not be truthful (as in the case of artificial intelligences or puppets), we must rely on other, perhaps subtler, methods of inferring their internal states. Holding off on the very important question of how we should evaluate a creature's pain, let us instead examine how we currently determine whether a strange being is in pain. To simplify the problem significantly, let's focus on how this process occurs in the present-day United States. While even in this limited scope there is substantial debate over what counts as pain in non-human animals, there is at least one place where some sort of official, if temporary, compromise is forced into existence: animal cruelty law. Can US animal cruelty laws provide a formal definition of how Americans think of pain?

The answer is a bellowing, headache-inducing “no.” Besides the fact that animal cruelty laws are all over the map, they also assume the reader already knows what terms such as 'torture,' 'cruelty,' and 'abuse' mean [1]. The wording makes the legality of acts such as killing an animal (including euthanasia) depend on whether the person performing the act was acting with 'malicious intent' [1], rather than on behavioral or physiologic indicators of pain in the victim itself. Even the definition of 'animal' can be horribly vague and ancient-sounding: California's, for instance, is “any dumb brute” [2], while Alaska excludes fish from its definition [3]. And then there are the built-in loopholes: activities such as hunting, fishing, pest control, and agriculture are often explicitly excluded from prosecution under these laws [4].

If the laws themselves are vague and variable, perhaps we can pass the buck to the courts to determine what the 'official' definition of pain is in practice. In 2006 the American Prosecutors Research Institute published a guide [5] to prosecuting animal cruelty cases. The guide focuses on motivating the prosecution of these crimes (as indicators of future criminal activity [6], or as “broken window” crimes that can erode public trust in law enforcement if they go unchecked, in addition to being violations of animal welfare), and it also describes the types of cases and the resources available to prosecutors. Though prosecution might focus on very anthropocentric factors, such as how much the criminal act signals potential harm to the (human) community at large, the guide offers several recommendations on ways to argue for the amount of pain and suffering inflicted on the victims of animal cruelty. First among these techniques is to consult a veterinarian, who, being “among the most respected members of the community,” can offer expert “opinions regarding the speed of unconsciousness or death, and the degree of suffering to evaluate whether the death or killing was humane” [5].


So the legislators have given a vague direction to the courts, and the courts hand the technical questions off to veterinarians. How do vets determine whether an animal is in pain? Even after narrowing our scope to the veterinary community, we see substantial variation in how pain is viewed and treated. While the definition of pain as "an unpleasant sensory and emotional experience associated with actual or potential tissue damage, or described in terms of such damage" [7] is often cited in medical contexts, how does this translate into actual diagnosis in a veterinary setting? One approach is to see which behavioral and physiologic changes are associated with major tissue damage, as in the case of surgery, and then determine how accurately those measures can be used to deduce whether an animal was given analgesia after such a stimulus. If a measurement (such as heart rate, subjective scoring of posture, amount of locomotor activity, or wound licking) accurately indicates the presence or absence of known pain, and is measured reliably by multiple experimenters, then the measurement is considered indicative of pain [8], though confounds can occur [9]. Behavioral measurements may also be selected for evaluation based on common practical knowledge or past experience with the organism [10]. It should be noted, however, that Dr. Patrick Wall, one of the world's most respected pain researchers, admonished veterinary practitioners to abandon the idea of an intrinsically moral animal pain. Wall asks vets, “instead of agonizing over an undefinable concept of pain, why do we not simply study the individual's efforts to stabilize its internal environment and then aid it, or at least not intrude on those efforts, without good reason?” [11]
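The two validation criteria described above (does an indicator track whether an animal received analgesia, and do different observers agree when scoring it?) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the procedure used in the cited studies: each animal is reduced to a yes/no "in pain" call, the "known" condition is simply whether analgesia was withheld after surgery, and agreement between observers is summarized with Cohen's kappa, a standard chance-corrected agreement statistic swapped in here for illustration. The function names and the eight-animal data set are hypothetical.

```python
from collections import Counter


def accuracy(calls, known_pain):
    """Fraction of animals whose yes/no pain call matches the known condition."""
    if len(calls) != len(known_pain) or not known_pain:
        raise ValueError("need two equal-length, non-empty lists")
    hits = sum(c == k for c, k in zip(calls, known_pain))
    return hits / len(known_pain)


def cohens_kappa(rater_a, rater_b):
    """Chance-corrected agreement between two raters' yes/no calls."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("need two equal-length, non-empty lists")
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    # Agreement expected if each rater called "pain" at their own rate, at random.
    expected = sum(freq_a[c] * freq_b[c] for c in (True, False)) / (n * n)
    if expected == 1:
        raise ValueError("kappa is undefined when both raters use one category")
    return (observed - expected) / (1 - expected)


# Hypothetical data: True = analgesia withheld after surgery (pain assumed present).
known = [True, True, True, True, False, False, False, False]
# Hypothetical posture-based calls from two independent observers.
rater_a = [True, True, True, False, False, False, True, False]
rater_b = [True, True, True, False, False, False, False, False]

if __name__ == "__main__":
    print("accuracy A:", accuracy(rater_a, known))
    print("accuracy B:", accuracy(rater_b, known))
    print("kappa A vs B:", cohens_kappa(rater_a, rater_b))
```

With these made-up calls, observer A matches the known condition for 6 of 8 animals (0.75), observer B for 7 of 8 (0.875), and the two observers' kappa works out to 0.75. An indicator would only be treated as a pain measure if both numbers were high; how high is "high enough" is itself a judgment the sketch does not settle.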

This is hardly an exhaustive depiction of all the ways pain can be construed, even when limiting ourselves to American veterinary practice. We haven't found clear ground on which to seat pain, though behavioral changes, nociception, and similarity to human responses are certainly all good starting points. Going back up to the courts and the law, we find that in practice this notion of animal pain is further modulated by tradition and human intention when determining whether the pain is considered 'bad.' The 'clearly' neural and moral pain we see in humans becomes cloudier as we move away from humans toward other entities, and more and more troublesome as those entities become stranger. While we might expect (or at least hope for) direct neural measures of pain to sharpen the very fuzzy working definition I've sketched above, we should remember that the 'ground truth' for pain, the set of facts on which we build more nuanced definitions, consists of human behavioral responses (such as the response to the stove-top). We can look for similar behaviors, or similar neural correlates of these behaviors, in non-human animals, but both are correlative indicators. Until we specify (or agree upon) what exactly about pain makes it moral [12], and then find the behavioral or neural causes of that aspect, such correlations are all we will have for determining pain.

[1] West's Annotated California Codes. Penal Code. Part 1. Of Crimes and Punishments. Title 14. Malicious Mischief. § 597. Cruelty to animals

[2] West's Annotated California Codes. Penal Code. Part 1. Of Crimes and Punishments. Title 14. Malicious Mischief. § 599b. Words and phrases; imputation of knowledge to corporation

[3] Alaska Statutes. Title 11. Criminal Law. Chapter 81. General Provisions. Section 900. Definitions.

[4] West's Code of Georgia Annotated. Title 16. Crimes and Offenses. Chapter 12. Offenses Against Public Health and Morals. Article 1. General Provisions.

[5] American Prosecutors Research Institute. Animal Cruelty Prosecution: Opportunities for Early Response to Crime and Interpersonal Violence. American Prosecutors Research Institute Special Topics Series, July 2006.

[6] Luke, Carter, Jack Levin, and Arnold Arluke. Cruelty to Animals and Other Crimes: A Study by the MSPCA and Northeastern University. Massachusetts Society for the Prevention of Cruelty to Animals, 1997.

[7] Merskey, H. "Pain Terms: a list of definitions and notes on usage." Pain 6 (1979): 249-252. Note that the definition is expanded further in the original text, though it is usually this sentence that gets cited.

[8] Stasiak, Karen L., et al. "Species-specific assessment of pain in laboratory animals." Journal of the American Association for Laboratory Animal Science 42.4 (2003): 13-20.

[9] Liles, J. H., et al. "Influence of oral buprenorphine, oral naltrexone or morphine on the effects of laparotomy in the rat." Laboratory Animals 32.2 (1998): 149-161.

[10] Gillingham, Melanie B., et al. "A comparison of two opioid analgesics for relief of visceral pain induced by intestinal resection in rats." Journal of the American Association for Laboratory Animal Science 40.1 (2001): 21-26.

[11] Wall, Patrick D. "Defining pain in animals." Animal pain. New York: Churchill-Livingstone Inc (1992): 63-79.

[12] Major contenders here might be "because pain feels bad" (pain as a qualitative, subjective experience), "because I want it to stop" (pain as an example of interests), or "because pain implies a request for help" (pain as a social event), or some combination thereof.

Want to cite this post?

Zeller-Townson, R. (2013). Legal Pains. The Neuroethics Blog. Retrieved on , from http://www.theneuroethicsblog.com/2013/06/legal-pains.html